28.03.2025

How to Connect to Your GPU Dedicated Server

Connecting to a GPU dedicated server from AlexHost involves several stages, from choosing the right tariff to setting up the server and starting work. The main steps for connecting to and using the server are described below.

Choosing the right tariff

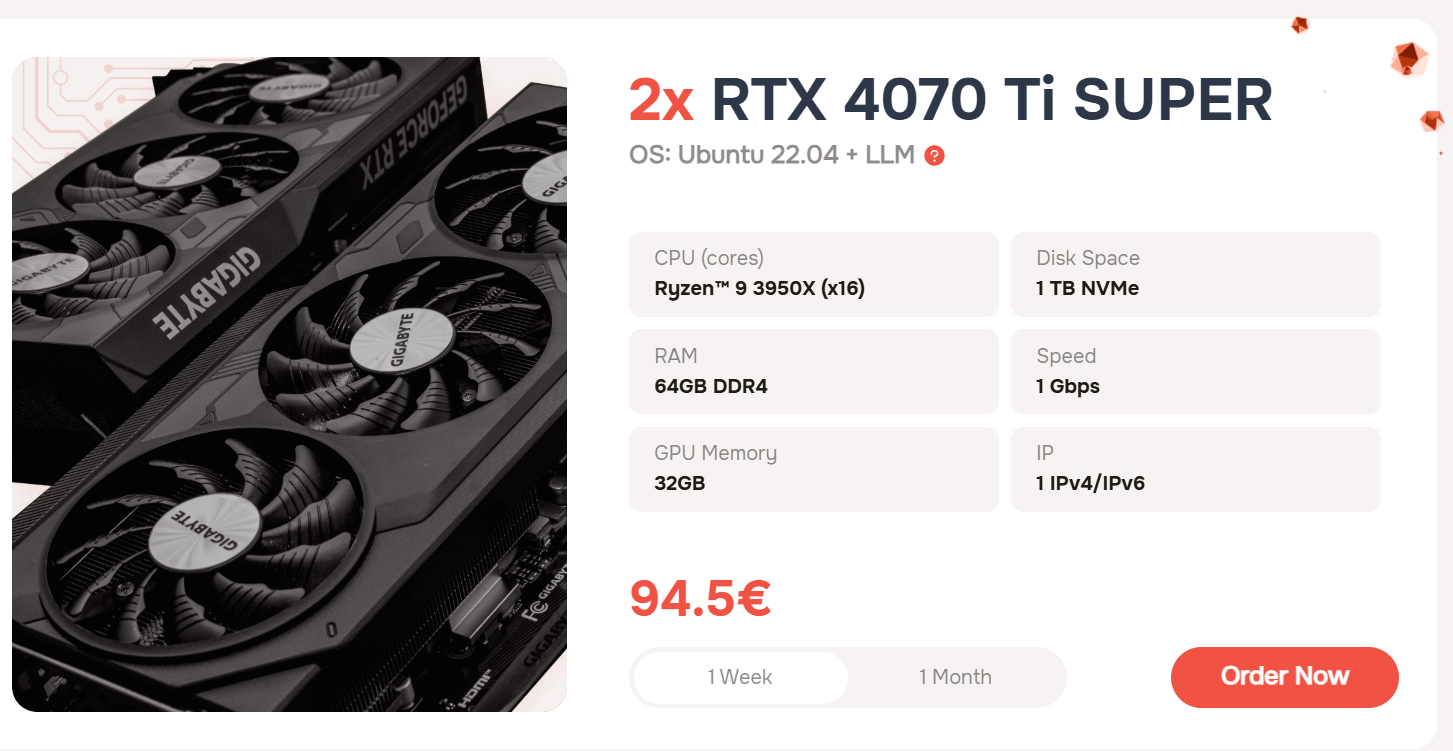

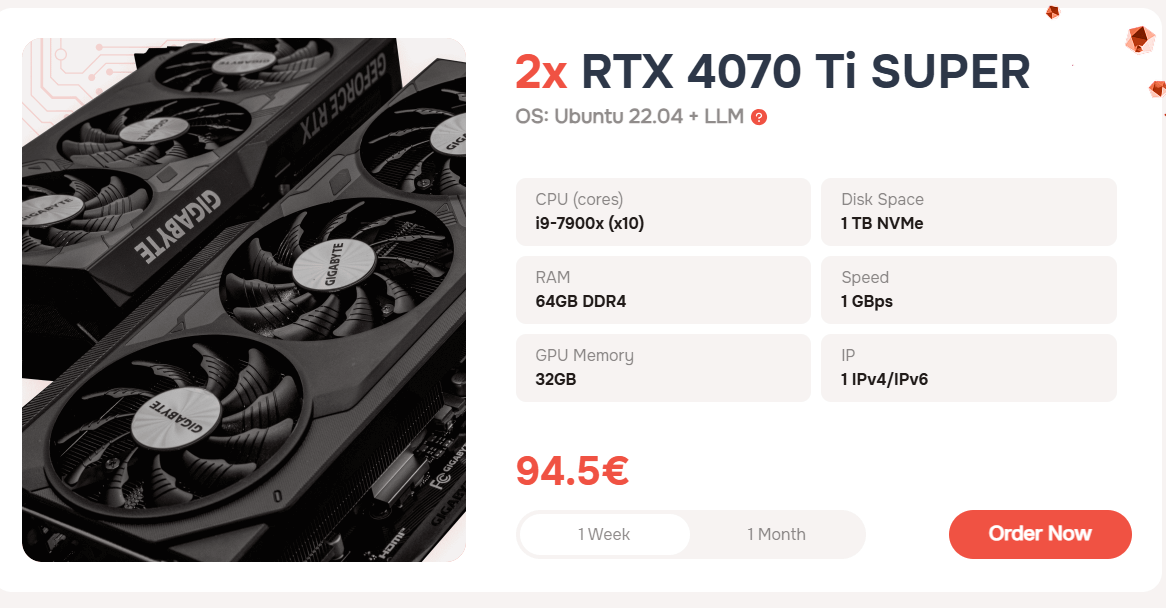

AlexHost offers two tariffs for GPU dedicated servers, shown in the screenshots above.

The dedicated servers come with two powerful, modern RTX 4070 Ti SUPER graphics cards. In addition, AlexHost provides a set of pre-installed tools and frameworks designed to simplify deploying and using Large Language Models (LLMs), so you get a server with ready-to-use AI tools and can start working with AI solutions immediately. The available options include:

- Oobabooga Text Gen UI

- PyTorch (CUDA 12.4 + cuDNN)

- SD Webui A1111

- Ubuntu 22.04 VM: GNOME Desktop + RDP, XFCE Desktop + RDP, KDE Plasma Desktop + RDP

- On request, we can also install any other OS
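Once the server is running, you may want to confirm that both GPUs are visible to the operating system. The following is a minimal sketch, not an AlexHost tool: it assumes the NVIDIA driver, which ships the `nvidia-smi` utility, is installed on the server, and it uses the standard `--query-gpu` and `--format=csv,noheader,nounits` options. The helper names `parse_gpu_csv` and `query_gpus` are hypothetical.

```python
import subprocess


def parse_gpu_csv(text: str) -> list[tuple[str, int]]:
    """Parse output of `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits`.

    Each non-empty line has the form "<GPU name>, <total memory in MiB>".
    """
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so a comma inside the GPU name cannot break parsing.
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Run nvidia-smi on the server and return (name, memory_mib) for each GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    try:
        gpus = query_gpus()
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        # nvidia-smi is missing (no driver) or returned an error.
        print(f"nvidia-smi is not available or failed: {exc}")
    else:
        for index, (name, memory_mib) in enumerate(gpus):
            print(f"GPU {index}: {name}, {memory_mib} MiB")
        print(f"Total GPUs detected: {len(gpus)}")
```

On a correctly provisioned server you would expect two entries, each reporting an RTX 4070 Ti SUPER.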

How to Connect to your GPU Server

After successful payment, you will receive the server access credentials by email. The steps for connecting to your GPU dedicated server are simple and are described below.

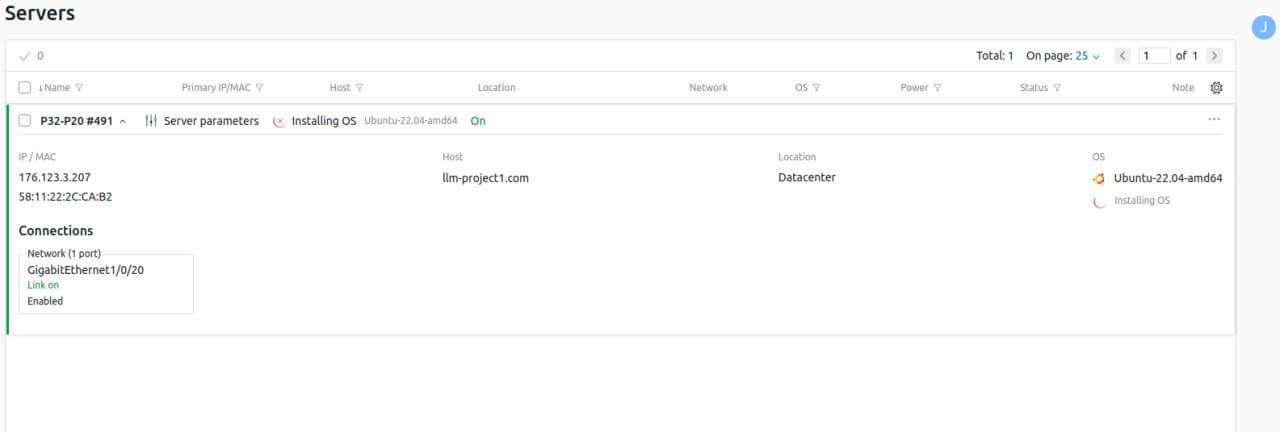

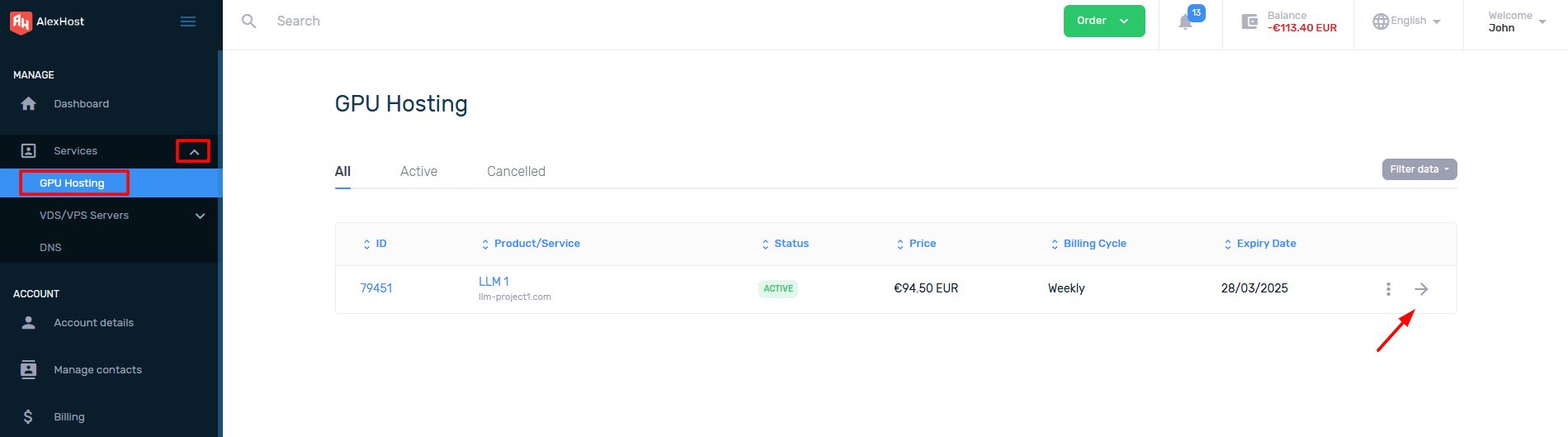

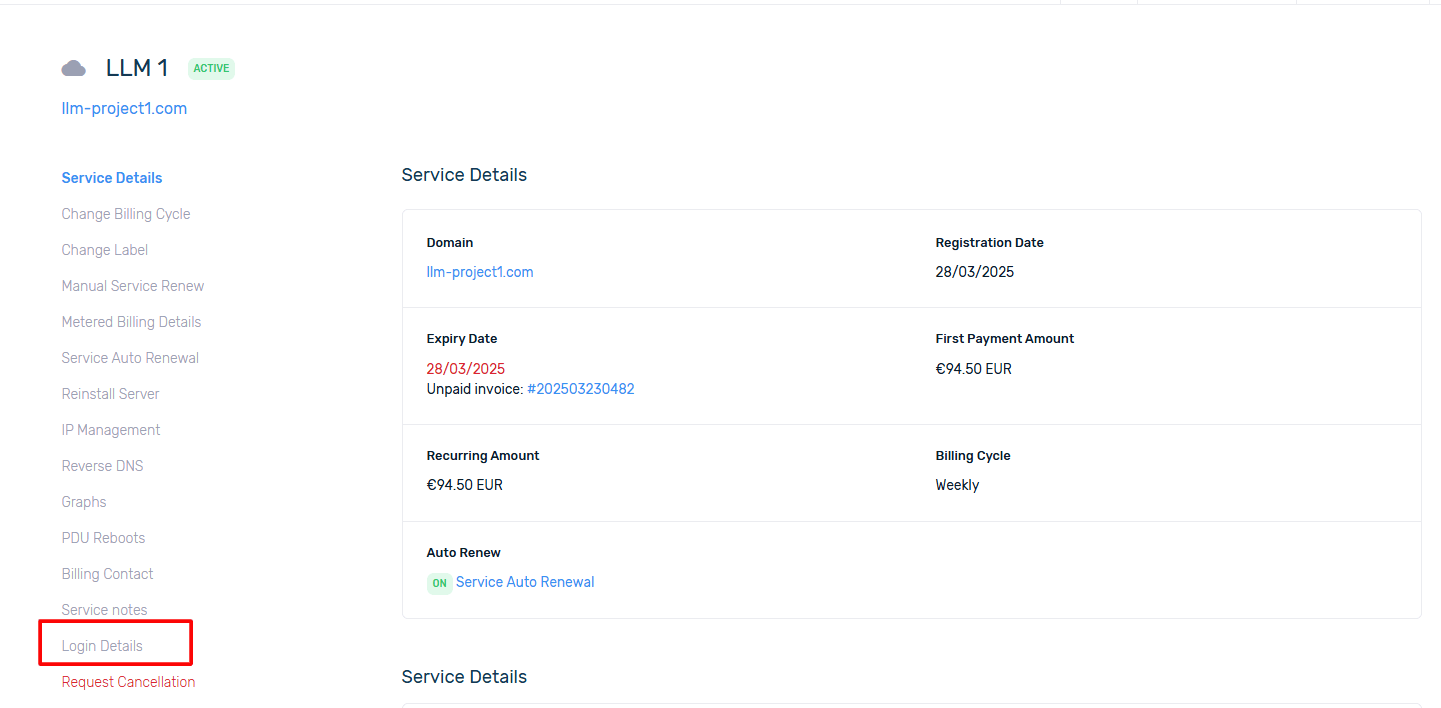

After ordering a GPU server, go to your account and select it from the list of active services. Click the service to open it.

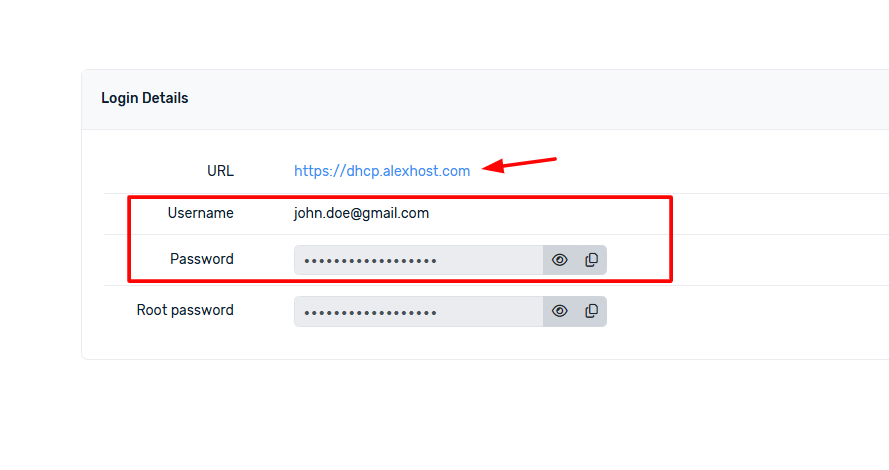

To see the credentials for logging into DCIManager, go to the Login Details section.

There you will find the DCIManager link, along with the corresponding Username and Password.

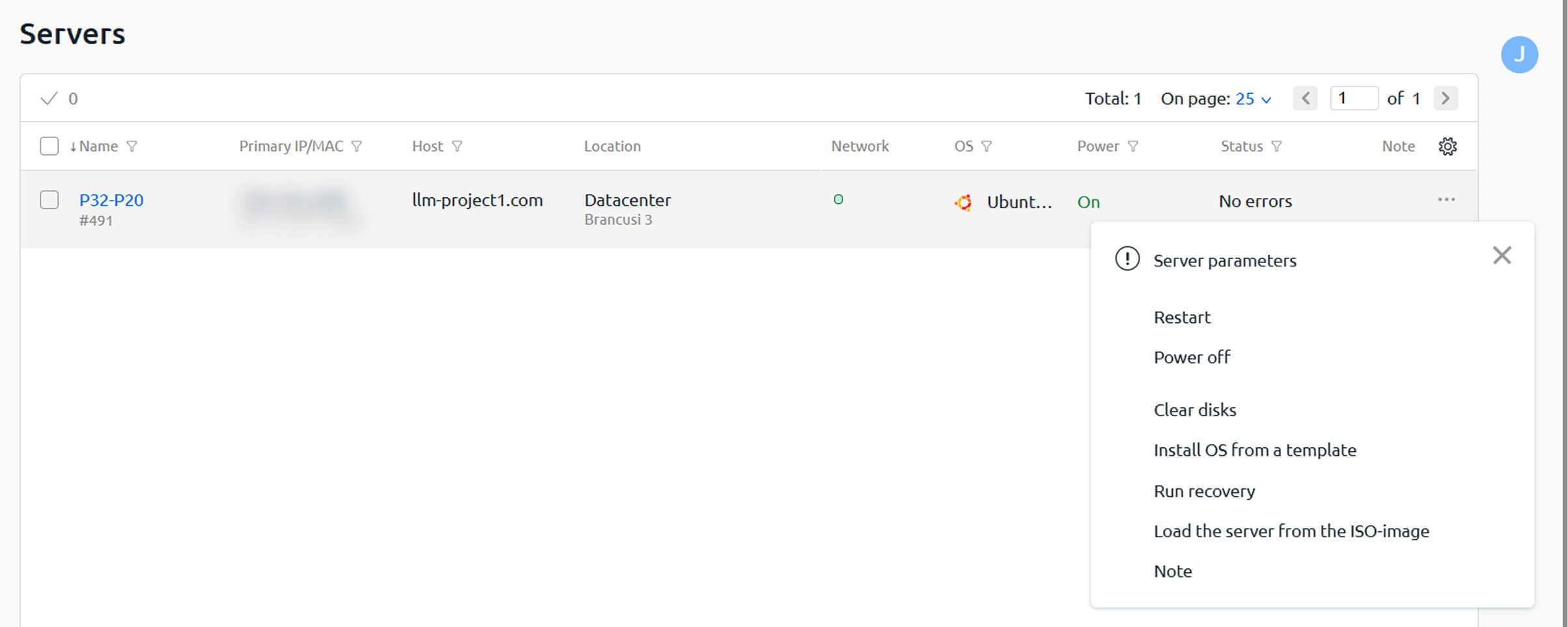

Click the three dots, then select Install OS from a template to view the available operating systems and LLM tools.

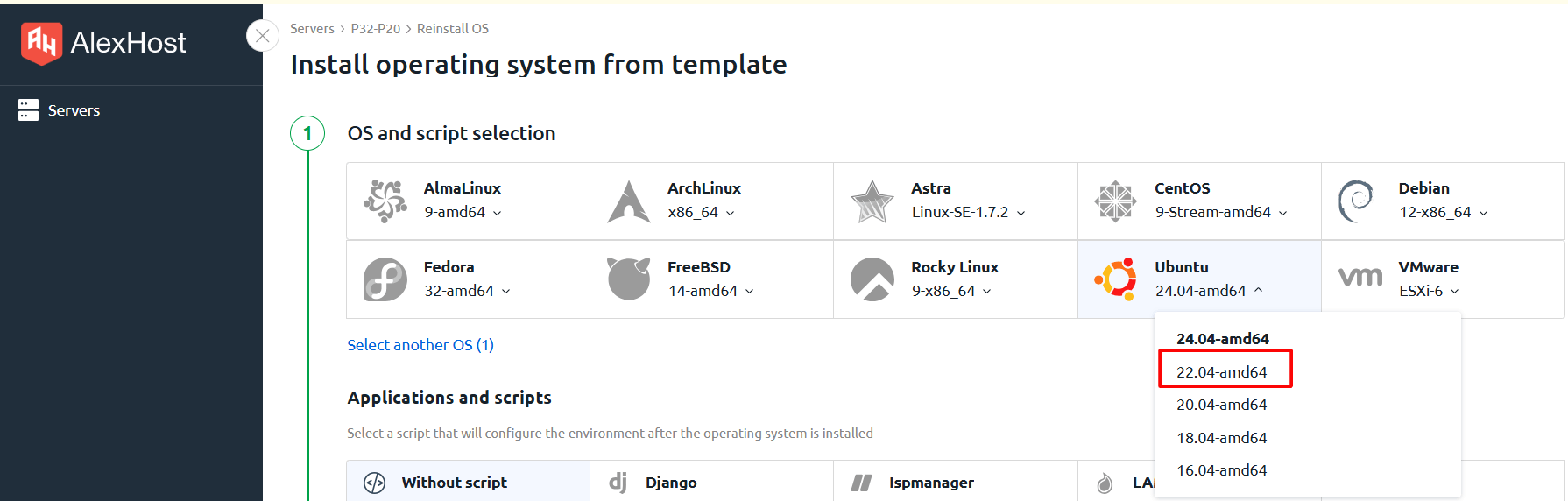

You can install any operating system available in the template list on your GPU server.

Currently, frameworks and tools for working with large language models (LLMs) are only supported on Ubuntu 22.04. When this OS is selected, the corresponding installation scripts for LLM frameworks become available for selection during setup.
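If you want to confirm from inside a running server that it uses the supported release, a minimal Python sketch could read `/etc/os-release`, the standard OS identification file on Ubuntu and other systemd-based distributions. The helpers `parse_os_release` and `is_llm_ready_os` are hypothetical, and the parser is simplified: it strips surrounding quotes but ignores shell escape sequences.

```python
from pathlib import Path


def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines from /etc/os-release (simplified: no escape handling)."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        info[key] = value.strip().strip('"').strip("'")
    return info


def is_llm_ready_os(info: dict[str, str]) -> bool:
    """True if the OS is Ubuntu 22.04, the only release the LLM install scripts support."""
    return info.get("ID") == "ubuntu" and info.get("VERSION_ID") == "22.04"


if __name__ == "__main__":
    path = Path("/etc/os-release")
    if path.exists():
        info = parse_os_release(path.read_text())
        status = "supported" if is_llm_ready_os(info) else "not supported"
        print(f"{info.get('PRETTY_NAME', 'unknown OS')}: {status} for the LLM scripts")
    else:
        print("/etc/os-release not found")
```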

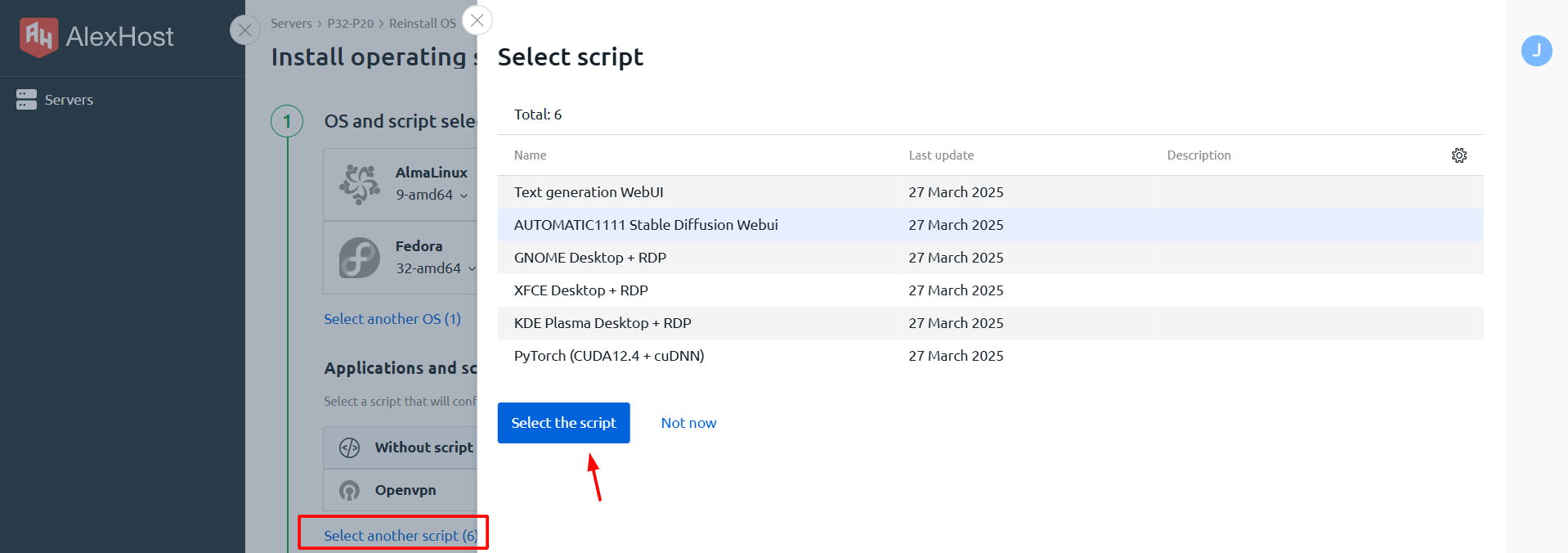

Choose the Select another scripts option to see all available LLM installation scripts. Here is a quick overview of each:

- Text generation WebUI is a web interface for working with text generation models such as GPT, LLaMA, Mistral and others. It allows you to load models, configure parameters and interact with text LLMs.

- AUTOMATIC1111 Stable Diffusion WebUI is one of the most popular web interfaces for generating images with the Stable Diffusion model. It provides a convenient GUI in which you can load models, configure image generation parameters, and use additional plugins and extensions.

- GNOME / XFCE / KDE Plasma Desktop + RDP are graphical environments for connecting to the server remotely via RDP (Remote Desktop Protocol). They are not directly related to LLMs, but they can be used to manage and work with the server on which the AI models run.

- PyTorch (CUDA 12.4 + cuDNN) is a library for working with neural networks and machine learning. It supports GPU acceleration via CUDA and is used to train and run LLMs (e.g. GPT, LLaMA) and image generation models (Stable Diffusion).
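To check that the PyTorch installation can actually use the GPUs, a minimal sketch such as the following could be run on the server. It relies only on standard PyTorch calls (`torch.cuda.is_available`, `torch.cuda.device_count`, `torch.cuda.get_device_name`, `torch.version.cuda`, `torch.backends.cudnn.version`); the `cuda_report` helper itself is hypothetical and returns `torch_installed: False` instead of failing when PyTorch is not installed.

```python
def cuda_report() -> dict:
    """Summarize what PyTorch can see: its CUDA/cuDNN versions and the visible GPUs."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        # CUDA version PyTorch was built with (None for CPU-only builds).
        "cuda_version": torch.version.cuda,
        # cuDNN version as an integer, or None if cuDNN is unavailable.
        "cudnn_version": torch.backends.cudnn.version(),
        "gpus": [],
    }
    if report["cuda_available"]:
        report["gpus"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

Note that `cuda_version` is the CUDA version PyTorch was compiled against, which can differ from the version shown in the `nvidia-smi` header (the highest version the installed driver supports).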

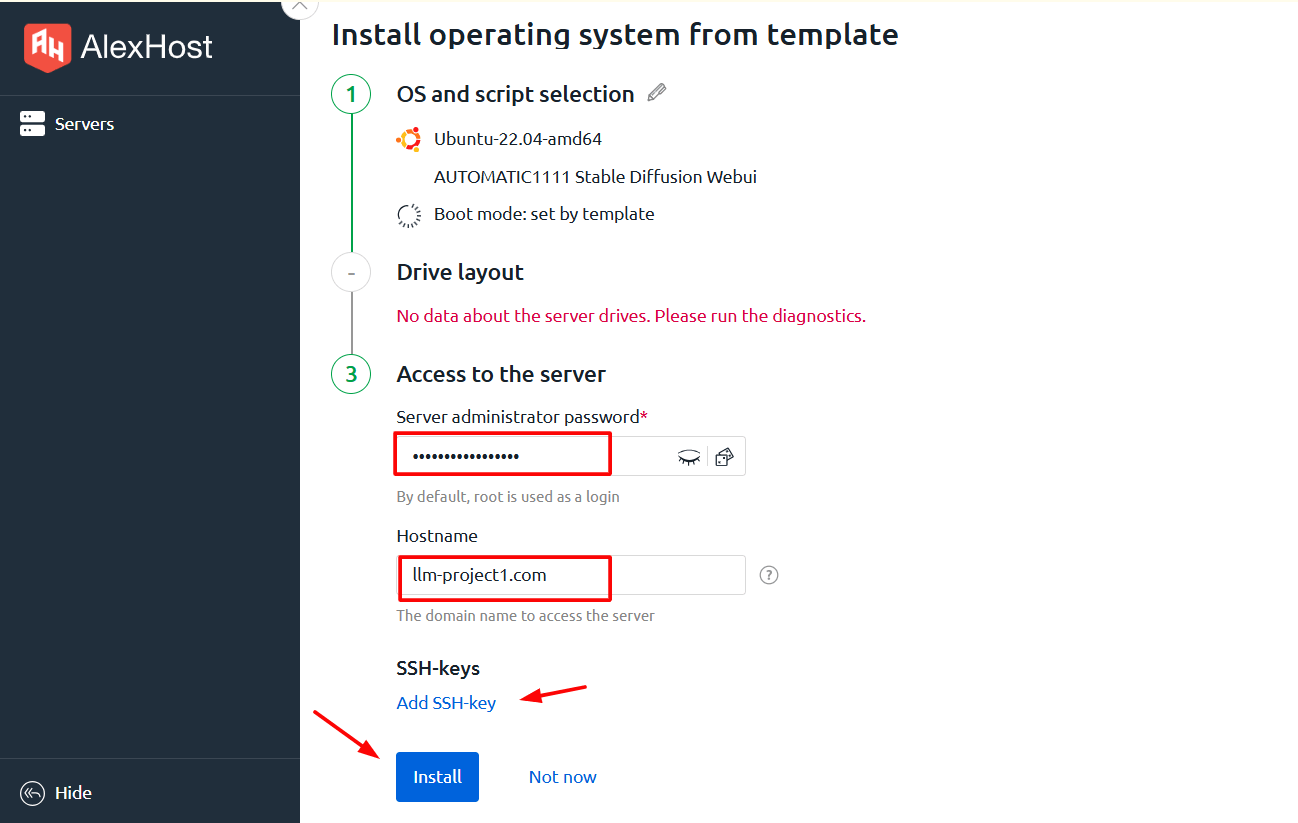

The final step is to set a password and hostname. Once you have filled in these fields, you can start the installation.
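If you would like to prepare these values in advance, the sketch below checks a hostname against the generic RFC 1123 rules (labels of 1 to 63 letters, digits or hyphens, not starting or ending with a hyphen, at most 253 characters in total) and generates a random password with Python's `secrets` module. This is an assumption-based helper, not part of DCIManager: the panel may apply stricter or different rules, so adjust the symbol set if it rejects some characters. The function names are hypothetical.

```python
import re
import secrets
import string

# One hostname label per RFC 1123: 1-63 chars, letters/digits/hyphens, no leading/trailing hyphen.
_LABEL = re.compile(r"(?!-)[A-Za-z0-9-]{1,63}(?<!-)")


def is_valid_hostname(hostname: str) -> bool:
    """Check a hostname against generic RFC 1123 rules (the panel may be stricter)."""
    if not hostname or len(hostname) > 253:
        return False
    if hostname.endswith("."):
        hostname = hostname[:-1]  # allow a single trailing dot (fully qualified form)
    return all(_LABEL.fullmatch(label) for label in hostname.split("."))


def generate_password(length: int = 20) -> str:
    """Return a random password containing lowercase, uppercase letters and digits."""
    if length < 8:
        raise ValueError("use at least 8 characters")
    # Quotes and backslashes are left out to avoid escaping problems in forms and shells.
    alphabet = string.ascii_letters + string.digits + "!@#%^*-_=+"
    while True:
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        if (any(c.islower() for c in password)
                and any(c.isupper() for c in password)
                and any(c.isdigit() for c in password)):
            return password


if __name__ == "__main__":
    print("hostname ok:", is_valid_hostname("gpu-server-01"))
    print("password:", generate_password())
```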

A successful installation process looks like this. Please note that the installation may take up to 30 minutes.
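Because the installation can take up to 30 minutes, you may prefer to poll the server instead of checking it manually. The following minimal sketch waits until a TCP port accepts connections. It assumes the installed template exposes SSH on port 22 (a desktop template might be reached over RDP on port 3389 instead), and an open port does not guarantee that the LLM installation scripts have already finished. The `wait_for_port` helper is hypothetical.

```python
import socket
import time


def wait_for_port(host: str, port: int = 22, timeout: float = 30 * 60,
                  interval: float = 15.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Each attempt gives up after a few seconds so the loop keeps polling.
            with socket.create_connection((host, port), timeout=min(interval, 5.0)):
                return True
        except OSError:
            pass  # refused, unreachable or timed out: the server is probably still installing
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(interval, remaining))
```

For example, `wait_for_port("203.0.113.10")` would poll port 22 for up to 30 minutes, where `203.0.113.10` is a placeholder for the IP address of your server.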